Predictive maintenance is one of the most frequently mentioned aspects of digitalization in industrial environments. On the one hand, it is hailed as a cure-all that promises enormous savings; on the other, the Industry 4.0 Index confirms that predictive maintenance continues to fall well short of expectations. There is evidently a huge gap between promise and actual performance. Why is that?

The ARC Advisory Group estimates the loss to the process industry due to unscheduled downtime to be in the region of 20 billion dollars. The costs of unplanned outages are reckoned to be ten times as high as those of planned maintenance stoppages. In other words, a reliable maintenance strategy clearly has benefits, and many companies implemented such strategies long ago for this reason. For them, the hype is nothing more than a hollow phrase for something that is already well established. Moreover, the key terms are defined in different ways, and the transition between the two data-based maintenance levels, condition monitoring and predictive maintenance, is blurred. The real value added by data-based maintenance concepts therefore has to be made visible if they are to live up to the hype.

A bigger role

That isn’t easy, because age-related equipment failures account for only a small fraction of the total. Process-related failures, and especially operator errors, play a far bigger role. All of this results in random failure patterns that are difficult to predict. It is no longer enough to monitor just a handful of trends. However, the use of more complex solutions based on advanced data analysis methods is in many cases still in its infancy. The new IIoT sensors currently being developed must be provided with standardized interfaces, relevant internal device data must be accessible, concepts like NAMUR Open Architecture (NOA) must mature and, last but not least, assistance systems must exist for operators to keep human error to a minimum. The list of essential prerequisites for achieving added value can be expanded at will.

Example: Cavitation

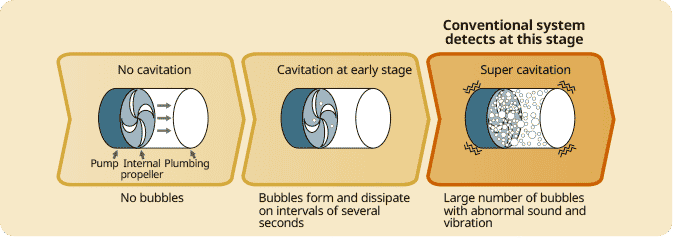

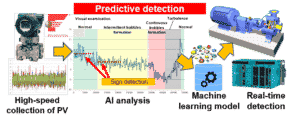

Cavitation is a good example of process-related damage. Under certain conditions, bubbles can form in pumps; when they implode, there is a risk of serious damage to the materials. Cavitation is accompanied by severe vibration and loud noise. Both can be measured using suitable sensors and are hence useful parameters for detecting cavitation. Unfortunately, by the time they become measurable, the cavitation will already be so far advanced that damage cannot be ruled out.

Detecting cavitation earlier in turn presupposes a robust evaluation algorithm that can produce a reliable signal from noisy measurements. This is where machine learning algorithms, which are capable of meeting precisely these requirements, come into play. Their use allows a much earlier warning of cavitation, as soon as the first bubbles start to form.

The time gained, up to 10 minutes compared with detection based on the vibration and noise of fully developed cavitation, is sufficient to permit countermeasures to be taken in good time. The likelihood of cavitation damage is minimized, and the maintenance intervals can be extended accordingly. What’s more, the deterioration in the pump’s efficiency and delivery head that generally accompanies cavitation is avoided.
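The idea of turning noisy measurements into a stable warning signal can be illustrated with a deliberately simple sketch. The article does not describe the actual evaluation algorithm, and the code below is not machine learning: it swaps in a plain exponential moving average followed by a hysteresis threshold, purely to show why a raw measurement needs conditioning before it can trigger an alarm. The function names, the thresholds, the smoothing factor and the synthetic "cavitation indicator" series are all hypothetical and not taken from any real pump or product.

```python
import random
from typing import List, Optional, Sequence


def smooth(values: Sequence[float], alpha: float = 0.2) -> List[float]:
    """Exponentially weighted moving average; alpha is an assumed smoothing factor."""
    smoothed: List[float] = []
    level: Optional[float] = None
    for value in values:
        # The first sample initializes the average; later samples are blended in.
        level = value if level is None else alpha * value + (1.0 - alpha) * level
        smoothed.append(level)
    return smoothed


def alarm_states(values: Sequence[float], on_threshold: float,
                 off_threshold: float) -> List[bool]:
    """Hysteresis: switch on at on_threshold, switch off only at off_threshold."""
    active = False
    states: List[bool] = []
    for value in values:
        if not active and value >= on_threshold:
            active = True
        elif active and value <= off_threshold:
            active = False
        states.append(active)
    return states


def first_alarm_index(states: Sequence[bool]) -> Optional[int]:
    """Index of the first sample with an active alarm, or None if there is none."""
    for index, state in enumerate(states):
        if state:
            return index
    return None


def synthetic_indicator(n: int = 300, onset: int = 150, seed: int = 0) -> List[float]:
    """Hypothetical indicator: bounded noise, plus a slow rise after `onset`."""
    rng = random.Random(seed)
    series: List[float] = []
    for i in range(n):
        drift = 0.0 if i < onset else 0.02 * (i - onset)
        series.append(drift + rng.uniform(-0.3, 0.3))
    return series


if __name__ == "__main__":
    raw = synthetic_indicator()
    states = alarm_states(smooth(raw), on_threshold=1.0, off_threshold=0.5)
    print("first alarm at sample:", first_alarm_index(states))
```

The two thresholds are the design choice worth noting: with a single threshold, a noisy signal hovering near the limit would switch the alarm on and off repeatedly, whereas the gap between the on and off levels keeps the warning stable. A learned model as described in the article would replace the smoothing step, not the need for a stable output.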

Maintenance is only one small building block

The ability to detect cavitation in this way may be only one small building block in a comprehensive predictive maintenance concept. Yet it shows how much value can be added by using smart algorithms rather than conventional methods. It also demonstrates the close connection between maintenance and process technology. For this reason, maintenance is only ever one element of an overall concept that sheds light on unplanned downtime from all possible angles. Digital transformation is creating new opportunities, and this kind of concept will have to grow continuously to keep pace. More than anything else, though, simply consolidating the necessary data and information will once again mean breaking down silos.

